Visualization Software Architectures for Arts, Humanities, and STEM Integration

AR, VR, Physicalization & Interaction


One of the Sculpting Vis Collaborative’s primary thrusts is the intersection of augmented reality, virtual reality, physicalization, and interactivity. As computing capacity continues to advance, access to big data is also improving, which broadens the potential for visualizations that move beyond two-dimensional screens and into the embodied, physical world. A significant body of research has found that tactile and three-dimensional visualizations can improve memory, comprehension, and learning, especially among lay audiences. The Collaborative is exploring these promising avenues for visualization through tightly integrated digital + analog methodologies.

Virtual Reality

The image below shows a demonstration at the Texas Advanced Computing Center’s Visualization Laboratory in which virtual reality is used to view and explore a three-dimensional, multivariate visualization of the Gulf of Mexico, encoded with hand-crafted glyphs and colormaps in the Artifact-Based Rendering system. Here, PI Francesca Samsel and graduate student Claire Fitch assist Michael Dell as he explores the visualization in the VR headset.
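For readers unfamiliar with colormapping, the sketch below shows the general idea behind mapping a scalar variable to color: normalize the value into [0, 1], then interpolate linearly between the two control points that bracket it. It is a minimal illustration, not code from the Artifact-Based Rendering system; the control points, the `normalize` and `apply_colormap` helpers, and the temperature samples in the usage lines are all hypothetical.

```python
from bisect import bisect_right

# Hypothetical diverging colormap: (normalized position, (r, g, b)) with channels in [0, 1].
# Illustrative values only; not a colormap taken from the Artifact-Based Rendering system.
CONTROL_POINTS = [
    (0.0, (0.02, 0.19, 0.38)),  # deep blue for low values
    (0.5, (0.97, 0.97, 0.97)),  # near-white midpoint
    (1.0, (0.40, 0.00, 0.05)),  # dark red for high values
]


def normalize(value: float, vmin: float, vmax: float) -> float:
    """Map a data value into [0, 1], clamping anything outside [vmin, vmax]."""
    if vmax == vmin:
        return 0.0
    return min(max((value - vmin) / (vmax - vmin), 0.0), 1.0)


def apply_colormap(t: float, points=CONTROL_POINTS) -> tuple[float, float, float]:
    """Linearly interpolate an RGB color between the control points bracketing t."""
    positions = [p for p, _ in points]
    i = bisect_right(positions, t)
    if i == 0:  # t below the first control point
        return points[0][1]
    if i == len(points):  # t at or above the last control point
        return points[-1][1]
    (p0, c0), (p1, c1) = points[i - 1], points[i]
    w = (t - p0) / (p1 - p0)  # fractional distance between the bracketing points
    return tuple(a + w * (b - a) for a, b in zip(c0, c1))


# Usage: color three hypothetical temperature samples (degrees C) on an 18-30 range.
samples = [18.0, 24.0, 30.0]
colors = [apply_colormap(normalize(v, 18.0, 30.0)) for v in samples]
```

Perceptually designed colormaps typically use more control points and interpolate in a perceptual color space rather than RGB; plain RGB interpolation is used here only to keep the sketch short.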

Augmented Reality

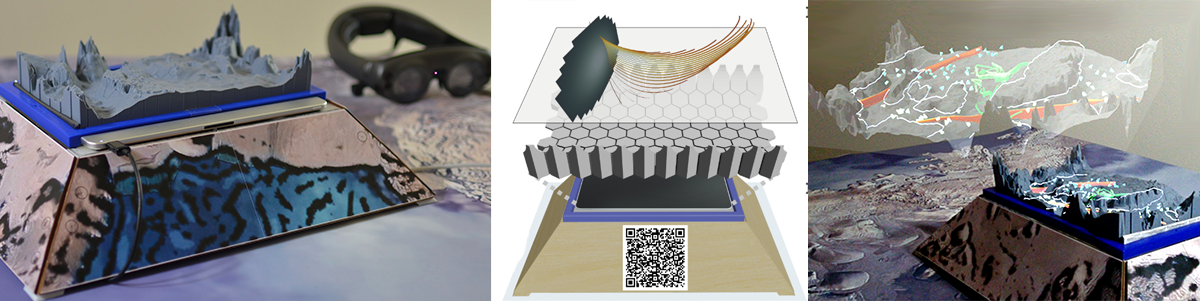

Physical visualizations, or physicalizations, are useful for many tasks such as communication and display, but without some kind of digital element they are often insufficient as research tools. The Collaborative is therefore working to integrate augmented reality components with aspects of the physical world to broaden their potential for research-oriented uses such as data exploration, interrogation, and group science.

Physicalization

Incorporating physical components into research practice provides numerous benefits, including expanded avenues for bringing artists and members of the lay public directly into the visualization process. In the upper-left image below, artist Francesca Samsel sculpts glyphs out of clay. These clay glyphs can be scanned into the Artifact-Based Rendering system and used as data-mapped elements in visualizations. The bottom-left image shows different types of soil collected from the Arctic as part of the Collaborative’s Climate Prisms project, which focused in part on connecting physical geographic change and on-the-ground research processes with abstracted data for public audiences. The middle and rightmost images show glass pieces arranged on watercolor paper, created for a stop-motion animation that more clearly connects Arctic data with ice movement. The stop-motion animation will be used alongside climate data visualizations of Arctic ice melt. Physical objects provide opportunities to integrate artistic practice with research praxis and visualization production.
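Once a clay glyph has been scanned, a single mesh can be placed many times in a scene, with each copy driven by the data. The sketch below illustrates one common way to do this for vector data, assuming the scanned glyph mesh points along its local +z axis: each instance is scaled by the vector's magnitude and rotated to point along the vector using Rodrigues' rotation formula. The `GlyphInstance` type, the `make_glyph` and `apply` helpers, and the scale range are hypothetical and do not describe the Artifact-Based Rendering system's actual implementation.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]

IDENTITY: Mat3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


@dataclass
class GlyphInstance:
    """One placed copy of a scanned glyph mesh (hypothetical structure)."""
    position: Vec3
    scale: float
    rotation: Mat3  # 3x3 row-major matrix applied to the glyph's local coordinates


def rotation_from_z(d: Vec3) -> Mat3:
    """Rotation taking the +z axis onto unit vector d, via Rodrigues' rotation formula."""
    x, y, z = d
    s = math.hypot(x, y)  # sin(theta): length of z_hat x d = (-y, x, 0)
    c = z                 # cos(theta): z_hat . d
    if s < 1e-12:
        # d is (anti)parallel to +z: identity, or a 180-degree turn about the x axis
        if c > 0:
            return [row[:] for row in IDENTITY]
        return [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    kx, ky = -y / s, x / s  # unit rotation axis; its z component is 0
    t = 1.0 - c
    return [
        [c + kx * kx * t, kx * ky * t, ky * s],
        [kx * ky * t, c + ky * ky * t, -kx * s],
        [-ky * s, kx * s, c],
    ]


def make_glyph(position: Vec3, vector: Vec3, vmin: float, vmax: float,
               min_scale: float = 0.5, max_scale: float = 2.0) -> GlyphInstance:
    """Scale a glyph by vector magnitude (clamped to [vmin, vmax]) and aim it along the vector."""
    mag = math.sqrt(sum(v * v for v in vector))
    t = 0.0 if vmax == vmin else min(max((mag - vmin) / (vmax - vmin), 0.0), 1.0)
    scale = min_scale + t * (max_scale - min_scale)
    if mag == 0.0:
        rotation = [row[:] for row in IDENTITY]  # no direction to follow
    else:
        rotation = rotation_from_z(tuple(v / mag for v in vector))
    return GlyphInstance(position, scale, rotation)


def apply(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a column vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


# Usage: a unit-magnitude vector at a sample point, on a 0-2 magnitude range.
g = make_glyph((10.0, 4.0, -2.0), (1.0, 0.0, 0.0), vmin=0.0, vmax=2.0)
tip = apply(g.rotation, (0.0, 0.0, 1.0))  # the glyph's local +z now points along +x
```

Reusing one scanned mesh with a per-instance transform keeps memory use low even when the same hand-sculpted glyph is placed at many sample points.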

Interaction

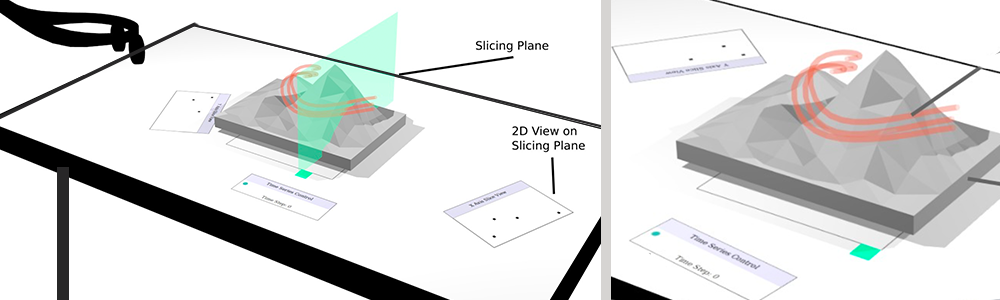

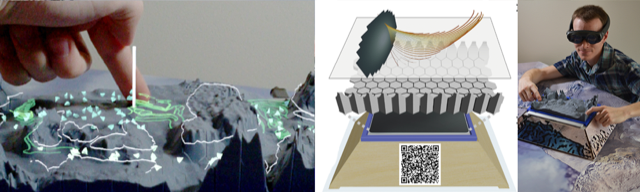

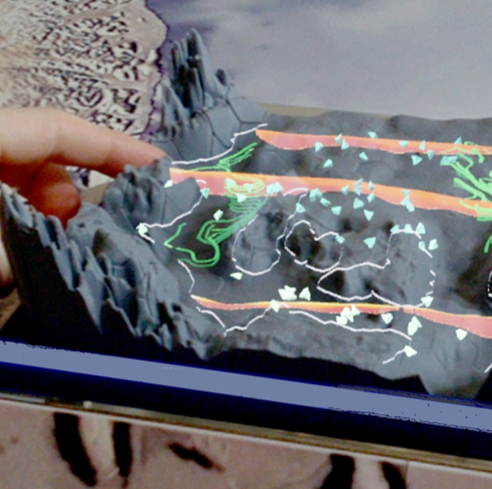

Data physicalizations (e.g., 3D-printed terrain models, anatomical scans, or even abstract data) can naturally engage both the visual and haptic senses in ways that are difficult or impossible to achieve with traditional planar touch screens and even immersive digital displays. Yet the rigid 3D physicalizations produced with today’s most common 3D printers are fundamentally limited for data exploration and querying tasks that require dynamic input (e.g., touch sensing) and output (e.g., animation), functions that are easily handled with digital displays. We introduce a novel style of hybrid virtual + physical visualization designed specifically to support interactive data exploration tasks. Working toward a “best of both worlds” solution, our approach fuses immersive AR, physical 3D data printouts, and touch sensing through the physicalization. We demonstrate that this solution can support three of the most common spatial data querying interactions used in scientific visualization: streamline seeding, dynamic cutting planes, and world-in-miniature visualization. Finally, we present quantitative performance data and describe a first application to exploratory visualization of an actively studied supercomputer climate simulation dataset, with feedback from domain scientists.
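To illustrate the streamline seeding interaction, the sketch below assumes the 3D print is a uniformly scaled, axis-aligned miniature of the data domain, so a touch point measured on the print (in millimeters) can be mapped back to data coordinates and used as the seed for fourth-order Runge–Kutta (RK4) integration through a vector field. The function names, the coordinate mapping, and the analytic swirl field in the usage lines are hypothetical; the published system's touch sensing, registration, and AR rendering are not shown.

```python
from typing import Callable

Vec3 = tuple[float, float, float]
VectorField = Callable[[Vec3], Vec3]


def print_to_data(p_mm: Vec3, print_origin_mm: Vec3, mm_per_data_unit: float) -> Vec3:
    """Map a touch point on the print (mm, print frame) to data-space coordinates.

    Hypothetical: assumes a uniformly scaled, axis-aligned miniature of the data domain.
    """
    return tuple((p - o) / mm_per_data_unit for p, o in zip(p_mm, print_origin_mm))


def _axpy(p: Vec3, v: Vec3, s: float) -> Vec3:
    """Return p + s * v componentwise."""
    return tuple(a + s * b for a, b in zip(p, v))


def rk4_step(field: VectorField, p: Vec3, h: float) -> Vec3:
    """Advance one classic fourth-order Runge-Kutta step of size h along the field."""
    k1 = field(p)
    k2 = field(_axpy(p, k1, h / 2))
    k3 = field(_axpy(p, k2, h / 2))
    k4 = field(_axpy(p, k3, h))
    return tuple(
        pi + h / 6 * (a + 2 * b + 2 * c + d)
        for pi, a, b, c, d in zip(p, k1, k2, k3, k4)
    )


def trace_streamline(field: VectorField, seed: Vec3, h: float = 0.1,
                     max_steps: int = 200,
                     in_bounds: Callable[[Vec3], bool] = lambda p: True) -> list[Vec3]:
    """Integrate forward from seed, stopping at max_steps or when leaving the domain."""
    points = [seed]
    p = seed
    for _ in range(max_steps):
        p = rk4_step(field, p, h)
        if not in_bounds(p):
            break
        points.append(p)
    return points


# Usage: a touch 50, 20, and 10 mm from the print's origin, at 10 mm per data unit.
seed = print_to_data((50.0, 20.0, 10.0), (0.0, 0.0, 0.0), 10.0)  # (5.0, 2.0, 1.0)
swirl = lambda p: (-p[1], p[0], 0.0)  # hypothetical analytic field: rotation about z
line = trace_streamline(swirl, seed, h=0.01, max_steps=100)
```

A real physicalization is rarely a perfect axis-aligned scale model, so in practice the print-to-data mapping would more likely be a full rigid or affine transform obtained through registration; the uniform scale here is a simplifying assumption.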

Publications

    @article{10.1145/3488542,
      author = {Herman, Bridger and Omdal, Maxwell and Zeller, Stephanie and Richter, Clara A. and Samsel, Francesca and Abram, Greg and Keefe, Daniel F.},
      title = {Multi-Touch Querying on Data Physicalizations in Immersive AR},
      year = {2021},
      issue_date = {November 2021},
      publisher = {Association for Computing Machinery},
      address = {New York, NY, USA},
      volume = {5},
      number = {ISS},
      url = {https://doi.org/10.1145/3488542},
      doi = {10.1145/3488542},
      abstract = {Data physicalizations (3D printed terrain models, anatomical scans, or even abstract data) can naturally engage both the visual and haptic senses in ways that are difficult or impossible to do with traditional planar touch screens and even immersive digital displays. Yet, the rigid 3D physicalizations produced with today's most common 3D printers are fundamentally limited for data exploration and querying tasks that require dynamic input (e.g., touch sensing) and output (e.g., animation), functions that are easily handled with digital displays. We introduce a novel style of hybrid virtual + physical visualization designed specifically to support interactive data exploration tasks. Working toward a "best of both worlds" solution, our approach fuses immersive AR, physical 3D data printouts, and touch sensing through the physicalization. We demonstrate that this solution can support three of the most common spatial data querying interactions used in scientific visualization (streamline seeding, dynamic cutting places, and world-in-miniature visualization). Finally, we present quantitative performance data and describe a first application to exploratory visualization of an actively studied supercomputer climate simulation data with feedback from domain scientists.},
      journal = {Proc. ACM Hum.-Comput. Interact.},
      month = {nov},
      articleno = {497},
      numpages = {20},
      keywords = {tangible user interfaces, mixed reality, data physicalization}
    }

(2021). Proc. ACM Hum.-Comput. Interact. vol. 5, no. ISS


Sculpting Visualizations © 2021